An AI and Life Wake-Up Call for, Oh, Everyone

A conversational primer about AI, using it, and how it could change everything about how we live—fast. Written by an author, life-lover, and former corporate techie.

tl;dr: What basically everyone needs to know about AI and its impacts

This article is meant to be a conversational look at AI and how it could affect nearly everything. It’s for people who find most of the talk about AI alarming, overwhelming, or more technical than most of us need. The briefest possible summary of this article is as follows: AI is here to stay, you might want to use it (but intentionally), its capabilities are exponentially increasing, and there are some very proximal and alarming possibilities for all of us. Emphasis on “possibilities”—no one really knows what’s going to happen.

But the very things I unsolicitedly recommend we do to face whatever our AI future looks like with savvy, courage, and pluck happen to be the very things that prepare a person for a good life no matter what actually happens. So that’s a spot of good news.

Here for the full party? Read on.

As you know, I write this sometimes funny and also sometimes supposedly insightful column about what makes life cool and people interesting. With stories. It’s even been accused of being “curiously important” and “a breath of fresh air,” two accusations I have embraced most sincerely. And in an AI future wherein human life as we know it gets, erm, fuzzy, I plan to keep writing stories like these since they may matter more than ever. Speaking of AI—see what I did there? smoothly transitioned to the actual topic?—I find myself endlessly fascinated by this figurative ASTEROID which may or may not be (depending on who you ask) hurtling toward our existence, how much some people know and care, and how little others do.

And, like the curious cat I am, I want to know more about all of this: the AI, what people think about it, what people don’t think about, what companies think about it, what the ethics and governance of it might look like, what governments can do/are doing about it, what dogs think about robots possibly walking them instead of their people. You know, that sort of thing.

In the following article, I share everything I currently know about AI in as down-to-earth language as is possible to use when we’re talking about all of us maybe living in a sci-fi episode of Star Trek in the near future.

Why you might care to read about my take on it: I became a web developer in my early 30s, and about the only thing I was ever good at in my near-decade in tech was asking enough “dumb questions” to understand things enough to explain complicated stuff in simple terms. That, and talking about big dreams with my co-workers. I’m very interested in what makes life cool and people endlessly interesting, and have a philosophical bent, but keep things digestible and real.

The Substack I write (More to Your Life) is not exclusively or even primarily about AI, but we can’t really talk about AI without caring about what makes life cool and people interesting. And, conversely, we now can’t talk about the latter without sometimes talking about AI. You’re most welcome to join the hundreds of people appreciating my sometimes funny discoveries about what makes life rich and people interesting. I’ll likely also occasionally link to future AI pieces.

Okay, ready for everything I know about AI? Here it is:

It’s complicated.

That’s it. Short and sweet!

Okay, I know a little bit more than that. It follows below, and then at the end I’ll share some of the likely unanswerable questions to which I want answers, as well as additional resources to check out if you’re interested.

But first, DISCLAIMERS:

I am not an expert. I have just recently spent a lot of time reading and researching and thinking about this stuff as it relates to my intense interest in, oh, humanity.

This piece is not for smarty-pants who want to show off how much they know about AI. If it pains you for its lack of sophistication, I’m sure we can still be friends over other stuff.

This could all change tomorrow. Heck, it is changing every day, so it will certainly change tomorrow.

Literally no one in AI research/building has all the answers, and even the experts disagree widely and loudly on possibilities and stances.

I haven’t watched an entire episode of Star Trek maybe ever, but I know it’s sci-fi.

What is AI?

AI or Artificial Intelligence “refers to computer systems that can perform complex tasks normally done by human-reasoning, decision making, creating, etc.” This is according to NASA which feels like a source I’m willing to trust with something as serious as this. Because, if you can’t trust NASA, who can you trust?

That said, I’m pretty proud of my own definition of AI in its simplest terms being “a computer doing things previously only possible by humans.” But I could see we needed a little more oomph there, so thanks NASA. We are as of today still the most intelligent species on the planet (hello to life at the top of the food chain!) but AI is coming for us as it can do things like problem-solve, plan, perceive stuff, and learn.

What are common examples of AI?

I’m only giving you three examples below, because, well, attention spans, and this isn’t the definitive piece on something as elusive and unknown and significant as AI. Plus, this is meant for regular people like you and me.

ChatGPT is like the Stanley Cup of Generative AI: it’s popular and commonly used. Like I just mentioned, it’s a type of generative artificial intelligence (defined for your absolute pleasure below), and is otherwise known as an AI Chatbot. It’s called generative because it can (wait for it) generate human-like text. For those looking for bonus points, GPT is an acronym for Generative Pre-trained Transformer. Fun fact: GPT-3, a predecessor of the model behind ChatGPT, was released back in 2020, and ChatGPT itself arrived in late 2022. I don’t think I touched it until 2022, and didn’t use it frequently until 2024.

Claude AI, like ChatGPT, is a type of generative AI and is built on large language models (defined below). It calls itself the thoughtful AI, and may have more stringent data privacy. If you’re curious, I use a paid tier of Claude, but use only the free versions of the others.

Perplexity: This AI is basically like a Google search on the kind of steroids that get an athlete kicked out of the Olympics, only there’s nothing illegal about it. You “talk” with it, it searches the web, and gives you a conversational and shockingly thorough answer. It also cites the sources behind its answer so you can verify and dig deeper into each one.

What’s Generative Artificial Intelligence?

Generative Artificial Intelligence can be cleverly acronymed with the letters GAI, and is a type of AI. The most familiar example is ChatGPT. It—wait for it—generates things based on prompts.

What are Large Language Models (LLMs)?

These bad boys are a type of AI, a language-based AI (see how that works?) that had access to ginormous catalogs, otherwise known as datasets, of information. These datasets come from “large scrapes of the internet,”[1] and sometimes from content owners selling the data they’ve collected from their users (us) to companies building AI models. The models were then “trained” on that information.

Because AI is a machine and not a human who suddenly wants a chocolate chip cookie in the middle of some deep learning and who thus forgets things, it kind of “remembers” patterns from the information it had access to. Kind of like how my friend Bonnie has a remarkable ability to remember and verbalize what she’s learned, but a zillion times better—at that. It will never have her fashion sense. And AI doesn’t actually remember anything; it just “stores” stuff like relationships between words and statistical associations and patterns.

When an AI chatbot like Claude or ChatGPT responds to your prompt with a recommendation for email copy or a recipe or “thoughts” on a life problem you posed to it, it’s basically drawing upon the information it was trained on, looking for familiar patterns, then predicting what series of words might best answer your prompt.
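For the curious, here is a toy sketch of that "predict the next word" idea. To be clear about the assumptions: real chatbots use enormous neural networks rather than simple word counts, and the training text and the function names (`train`, `predict_next`) are made up purely for illustration. It only shows the flavor of "look at patterns, then guess what comes next."

```python
from collections import Counter, defaultdict

# A made-up, tiny "training set". Real models train on vastly more text.
TRAINING_TEXT = (
    "the cat sat on the mat . "
    "the cat ate the cookie . "
    "the dog sat on the rug ."
)


def train(text):
    """Count which word follows which: a crude stand-in for 'learning patterns'."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train(TRAINING_TEXT)
print(predict_next(model, "the"))  # "cat" follows "the" more often than any other word
print(predict_next(model, "sat"))  # "on" is the only word that ever follows "sat"
```

Notice that the toy model never "understands" cats or cookies. It only tallies which words tend to appear together, which is roughly the spirit (if not the scale or the math) of what the big models do.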

What’s AGI?

AGI stands for Artificial General Intelligence. Not Artificial Generative Intelligence (see above) like I once thought, and which, in my humble opinion, sounds more credible but no one asked me about their naming choices. AGI is a type of artificial intelligence, and is considered human-level intelligence. But even as I type that, I’m like, “To which human are we comparing this intelligence?” I’m familiar with the Darwin Awards, and there are some not at all smart humans out there.

Anyway, as of May 2025 we have not achieved AGI, but some smart people think we could get there within a couple years. YEARS y’all, not DECADES. This means AIs (the systems themselves) would be at least as intelligent as any person on the planet. And there are some seriously scary possible consequences for us humans, most of whom would like to stay alive.

Is AI the same thing as ChatGPT?

Nope! But don’t you dare feel bad if you thought they were synonymous. Think of AI as a comprehensive toolkit, with ChatGPT being one of the tools in the toolkit. For many people, it might be the only AI tool they know about or actively use, even if websites and apps and services they use are using AI in the background.

See the section above, “What are common examples of AI?”, for more.

How do I use AI?

In a recent conversation with a very smart, tech-savvy Millennial woman who has never actively used AI, she candidly asked, “But how do I use it? How do I get to it?”

Right now, we ordinary people just need to know that AIs operate in a web browser, or you can download them as an app for your computer or phone. It’s 2025 and I won’t hold everyone back to explain desktop and mobile apps, but if you have questions, please see me after class. Or ask ChatGPT to explain.

To get to them, you can just Google any one of them or go directly to their websites. For the three GAI examples I mentioned, the respective websites are: chatgpt.com, claude.ai, perplexity.ai. When you navigate to them, don’t be surprised to find them tripping over themselves to help you. You can talk to them like you would a nice person at a help desk.

ChatGPT, for example, greets you with a helpful message, “What can I help with?”, and an invitation to “Ask me anything.” You can say literally anything. Here are a few examples: “Help me plan my day tomorrow” or “What can I make for dinner using Obscure Ingredient A and Obscure Ingredient B?” or “Help me think through my email reply to my coworker.”

You can even ask ChatGPT and Claude open-ended questions like, “What should I be asking for your help with?”

What are ordinary people using AI for?

Really smart people are using AI for all kinds of technical things, but those complicated things aren’t the point of this article. I’ve read enough to know that AI might be able to do all kinds of cool things for all kinds of industries, but today we’re talking about us regular humans.

These are just some of the examples I’ve come across or have personally used:

Therapy. Loads of people use it for the sorts of things you might take to a therapist.

Coming up with gift ideas for family members or coworkers.

Meal planning. AI is fully capable of taking budget and shopping/dietary preferences into account.

Researching things online and finding recommendations. And no, it’s not just another Google search which simply surfaces results.

Creating PDF flyers/documents.

Using it as an interior decorator. One lovely person I know has uploaded pictures of rooms in her home and asked it to help her re-imagine the space. It returned pictures of her rooms with possibilities.

Using it as a sounding board/thinking partner.

Generating (creating) emails, social media posts, and I’m pretty sure I’ve even seen companies using it to write their responses to employee feedback on Glassdoor. That’s a very lame application of it if you ask me.

Creating board games and books with it. One teenage girl even uses it to write endless alternate endings of a book she didn’t want to end.

One friend used ChatGPT to help her decide whether to rent a car or Uber from the airport to her event, and was dazzled by its helpfulness. I’m still waiting for my portion of the $100 she saved by renting a car (it was my suggestion she ask ChatGPT).

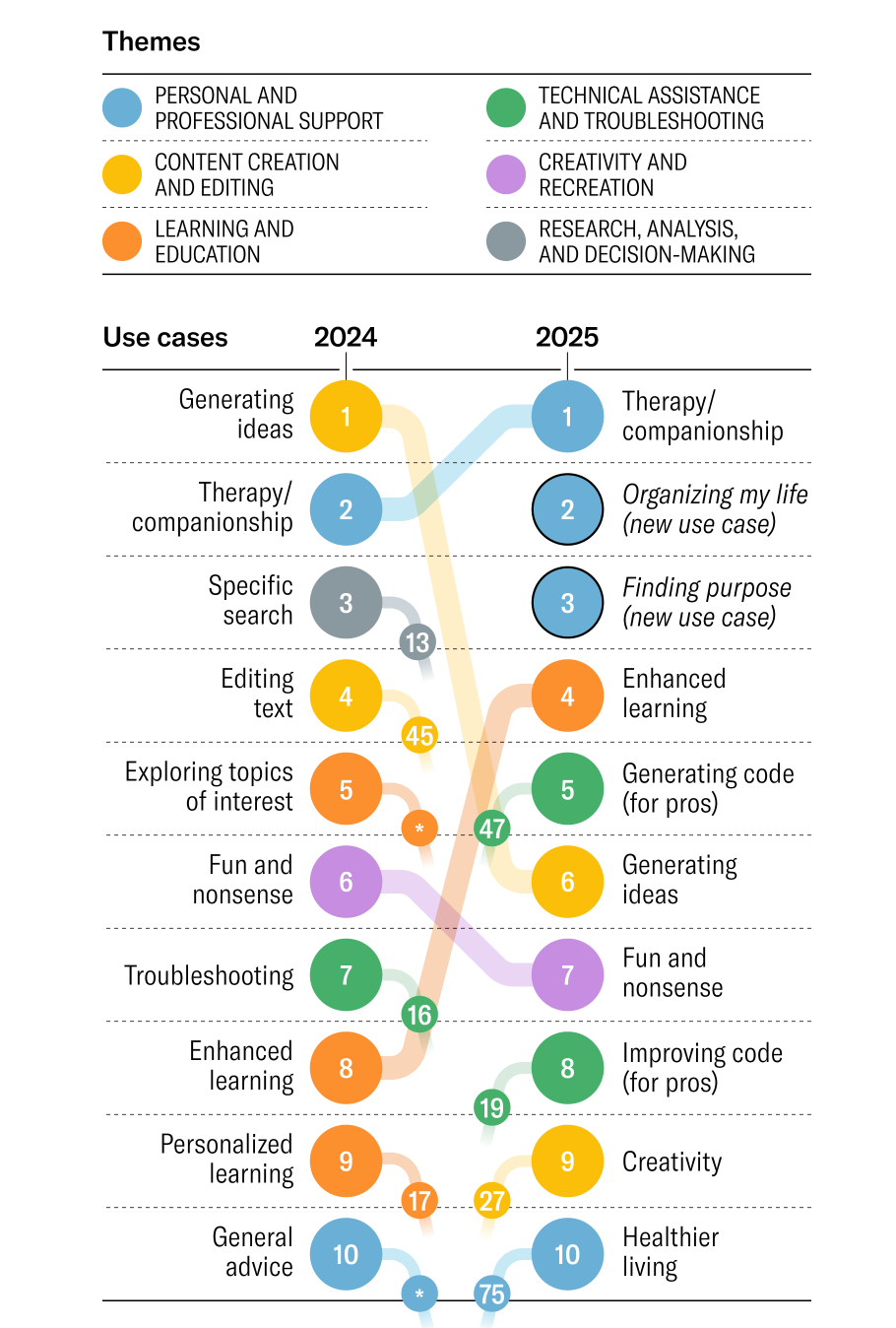

I’m not necessarily recommending you use AI in all these ways, but am rather just showing you examples of “AI in the wild.” For more personal and business examples, check out this article from Harvard Business Review. The following graphic from the same article is pretty astonishing if you ask me. Would you just look at the top use case?

What’s the big deal about AI?

Depending on your familiarity with AI, right now you might be thinking, it all sounds like a dream! What’s so scary about these tools that could make life so much easier? Well, there are concerns. Like, big ones. Now feels like an appropriate time to reiterate that not all the AI experts agree on risks or timelines and I’m interested in considering things from multiple viewpoints.

One fun risk to start with is the very real possibility of bad people using it to do bad things. Case in point: In 2024, scammers digitally cloned a company’s CFO for a video call where Fake CFO authorized a senior manager to transfer $25 million to scammer bank accounts.[2] I feel like I have an additional reason to feel validated in disliking corporate Zoom calls. This, though, isn’t even the worst that could happen. We’re talking about market manipulation, election interference, bioweapons, data breaches, and SO MUCH MORE.[3]

AI and AGI could upend work as we know it, replacing most humans in most jobs. And soon. The ones who could be the first ones impacted are “knowledge (or laptop) workers.” You know, people like me. I’m hoping my very human and sometimes humorous writing will keep me relevant for a couple weeks longer than most. I say that knowledge workers “could be” the first ones affected not just to hedge, but because even the smartest AI researchers and ethicists don’t know how this will go. We’ve never done this before, kind of like how humans had never done an industrial revolution before until we did. One article out of Denmark indicates that disruption that was supposed to be happening by now isn’t yet,[4] so it could be that current projections about our future are also overly aggressive. Or it could mean that the Danes are a steady sort of people.

Another concern is that it’s all happening super fast and there may not be an alternative unless we all agree for real to pause.[5] Unless that happens, everyone remains in a race because slowing down isn’t a great option. On a national level, we in the US don’t want to slow down since, if we slow down and China (for example) doesn’t, then we get into the super fun territory of really big national security concerns and global warfare. The company and the nation with the most/best AI stand the best chance of survival or of deterring threats.[6] At this point, you might like to go watch an episode of The Office to get some happy dopamine to counter this downer information.

Another super legitimate concern is that we humans (companies and governments) will think we’re in control of the AI, but we’re actually not, and won’t be able to stop it. It’s a very real possibility that AI will have just been nodding and pretending to follow our orders while we outsource more and more vulnerable things to it, and that we’ll then find out the really hard way (e.g. human annihilation) that we weren’t in fact in charge like we thought. We have actually already caught it actively lying to us.[7] And yes, I use “we” loosely since I was not part of these experiments, but hey, we humans are all in this together!

There are also many grave concerns around creative ownership and, very importantly, data privacy and security. There’s a LOT to say about this subject, and my concerns over it (as well as what to do about guardrails; see below) have me taking courses through Saras Institute and BlueDot Impact, and asking lots of “but what about…?” questions. Let’s just say this for now: I’ve worked at tech companies, and even lovely and robust-sounding privacy policies don’t always mean as much as we’d like in real life. And as we humans invite AI into more of our personal and professional lives (or have it thrust upon us by companies eager to keep up), and voluntarily or involuntarily share more of our information with it, poof, there goes privacy. Or at least it gets really complicated.

What about guardrails?

This one is tricky, because in case you’ve been in a coma for the last couple years, we don’t agree on much these days. There are some people “blissfully” unaware of the censorship by Big Tech over the past several years,[8] but those who saw and experienced censorship have understandably even graver concerns about whose guardrails we put in place.

Whose set of ethics is going to run the show and how are we going to define things? Just think about the friend or neighbor you tiptoe with in conversation because you see the world from wildly different perspectives. Now think about “their side” being the ones in charge of everything AI can and cannot do and what information it will share as factual and what it won’t. Not easy stuff, right?

Some of the questions I have about all this:

What does it mean for companies who are already struggling big with employee engagement to rush to implement AI?

As it does seem likely that work as we know it will be upended within the next couple years, what will that upending actually look like? Will it be gradual? What will AI-replaced people do for income?

If there is a “status inversion” and blue collar workers become much more valuable to the economy than white collar workers, who will be able to afford to pay for blue collar services?

Do employees in the companies creating AI (DeepMind, OpenAI, Anthropic) have concerns that they are very literally working themselves out of a job? How do they navigate these concerns? Or are they too busy making the things?

Are people at the AI companies empowered to speak up internally over ethical concerns?

Will people eventually want to read books or watch movies produced by AI, or will discerning humans still be able to tell that things like soul and shared humanity are missing?

In the short term, could we see a major consumer backlash to marketing as AI makes it infinitely easier for marketing teams to produce content, including AI UGC (User Generated Content), and we’re all bombarded even more with AI-generated marketing?

Do the AI researchers with grave concerns use AI in their daily lives? How so? This would be most interesting to know.

Unsolicited advice:

Because I wrote this piece, I get to give unsolicited advice. Remember, I care a lot about what makes life rich and people interesting. The past couple years of my post-corporate life have basically been a graduate level field study in purposeful living—talking with dozens of strangers around the country and world, playing and teaching pickleball, writing my next book (Dear Fellow Dreamer about taking risks for meaningful living and living in the messy middle), observing and studying employee experiences and HR dynamics, and living in all kinds of uncertainty. So I’ve got thoughts based on many experiences about waking up to life, no matter what does or doesn’t happen with AI.

Don’t be ignorant about AI. Whether you like it or not, and whether you end up using it or not, it’s worth learning about. It’s better to know what might be coming. If you’re a busy parent, seriously take time to learn about AI. If you keep raising your kids for the same future that parents have been raising kids for over the past thirty years, you could be doing them a serious disservice. Also, you’re going to want to be informed on how your kids are probably already using AI, and some of the scary possibilities.

Personally, I would recommend using AI so you know what it’s about and some of the really exciting developments it might make possible for society and our world at large. This will likely serve you in whatever our work future looks like, as an employee, entrepreneur, or leader who knows how to use AI will likely become more valuable than one who doesn’t. But use it intentionally and don’t outsource your best thinking and creativity to it. Ask yourself: Does my use of this make me weaker or stronger? Does it make me better or worse at uniquely human things like being thoughtful or being able to engage in deeply and mutually meaningful conversations?

Take improv classes. Not kidding. You’ll never feel more alive or human, and it’s excellent preparation for trusting your own intuition and your ability to pivot and adjust. Plus, you’ll laugh—a lot—and the power of laughter to see us all through tough stuff is not to be underestimated.

Prioritize meaningful living right now, not someday. Really notice life right now. Include a lot of people in your life. Prioritize connection with people over connection with technology, AI or not.

Read old stuff. Turns out, humans have a rich and fascinating history, and we can learn from it. And also be comforted by the things we’ve navigated before, and entertained by the human foibles that have been around for ages.

Prioritize matters of the soul. A human soul is something AI can never replicate, so take really good care of yours. If you already believe in God, trust that He is way bigger than even this. If you don’t yet, you might want to start. My relationship with Him and a knowledge of Truth are the most important things keeping me optimistic about navigating what’s coming, regardless of the timeline and actual circumstances.

Prioritize your physical health and enjoyment of having a cool human body—use it to do things robots never can appreciate in the same way we can. May I recommend picking up pickleball?

Detach big from over-identifying with material or professional status as these could get seriously upended in the coming years.

Focus big on creativity and listening to your own intuition about what’s fake and what’s real. In a deepfake world, it’s going to get much harder to distinguish between the two. I wrote an article last year that’s suddenly extra relevant to all this: Whose Voice Is Loudest in Your Life? (At least the first part of this article should be accessible to all readers).

Now is a great time to get your financial house in order—paying off debt, not living beyond your means, budgeting. Not that there’s any amount of money that guarantees security and safety, but addressing any overconsumption tendencies, simplifying your finances, and learning what is materially “enough” for you, does something powerful to your psyche and roots. I wrote a helpful book about the why and the how, even before I knew AI was “a thing.”

Conclusion

Well that was fun, wasn’t it? I sure thought so, and hope you did, too. What’s next in AI is anyone’s guess. But I do know what’s next for things here at More to Your Life. AI references can’t help but get sprinkled so very organically into my columns since, like I shared at the beginning, AI is very relevant to what I write and care about and vice versa.

Beyond that, I will probably write more about all AI stuff that I will link out from my regular column. But my focus for my column will very much be the funny-ish stories about what makes life cool and people interesting. You know, the things we humans uniquely get, and AI can only understand theoretically, no matter how chummy ChatGPT or Claude try to be. Become a subscriber if you’re not already.

What are you thinking about these days as related to AI?

P.S. If you are interested in having me speak to your group or have an event where you think I should speak, please get in touch. You can learn more about my background and speaking here.

[Bonus] Reading Round-up:

This is not a comprehensive list of what I’ve been reading, but it should be enough to get the most curious of you started. They’ll lead you down your very own rabbit holes, I’m sure.

This not exactly upbeat but readable piece shared an “existential threat” assessment from one of the creators of AI. This was a sobering read and made me think twice about all AI.

Thoughts on AI + Art: Interesting thoughts on ramifications of AI on art (painting, sketching, etc.). Why I liked it: A 5-minute read, thoughtful look at “aura” that can’t be replicated by AI, celebrating the creative process.

For those avoiding AI adoption on grounds that it’s bad for the environment, you might want to check this piece out. On my brief skim, the author challenges at least some environmental concerns over current AI use with lots of graphs and data.

AI isn’t as new as I thought. A quick skim of this history of AI by IBM highlights that it’s been around in theory at least for several decades, and in creative imagination for centuries. 1956 is considered the birthday of the field of AI, and that is wild to me.

AI Digest created this very helpful demo (simulated) of how an AI agent (think: a really great virtual assistant—still in early stages) actually works. It highlights for me how AI is like the ultimate goal achiever and checklist checker.

Carmen Van Kerckhove’s Substack essay (You are not your job. And soon you won’t have one) on preparing for the coming and very likely AI job losses was, I’d say, a must-read for everyone.

AI 2027 is an alarming scenario put together by lots of smart people about how quickly all this AI stuff could escalate. I deserve a prize for reading this whole thing, including many of the sidenotes and expandable text sections. What I liked about it: for being incredibly technical, they made it shockingly consumable, rather like Warren Buffett’s letters to shareholders. I found it engrossing enough to return to over and over, and stayed up until 11:30pm on a SCHOOL NIGHT to finish it. If you know me, you know that’s later than I like to be awake. They also actively invite disagreement with their scenarios, and hope they’re wrong. They include examples of what will be happening in the economy, politics, the workforce, the motivations of the AIs. If you’re into CliffsNotes versions of things, this New York Times article is where I first learned about it.

This essay by Gary Marcus is one criticism of the AI 2027 report, which suggests that the authors may inadvertently be creating the arms race of which they warn. He’s critical of some of their methodologies and skeptical of their timelines and outcomes, and isn’t the only one who’s critical of AI 2027.

This Free Press article by Tyler Cowen (economist and columnist) and Avital Balwit (Chief of Staff at Anthropic) is very worth reading. Even though they both wonder if “we’re helping to create the tools of our own obsolescence,” they somehow manage to be relatively optimistic about the future of AI and us.

When Monica Harris writes, I listen. And I appreciated her Substack piece, “Beyond DEI: Why AI's Identity-Blind Revolution Makes Us All Equal(ly) Replaceable.” A fascinating look at a possible future where manual work (blue collar) trumps, by a lot, mind work (white collar) and how this might change things societally.

A couple organizations I’m following for their insights and resources around AI ethics and safety are BlueDot Impact and Future of Life Institute.

[1] https://www.reuters.com/technology/reddit-ai-content-licensing-deal-with-google-sources-say-2024-02-22/

[2] https://www.ft.com/content/b977e8d4-664c-4ae4-8a8e-eb93bdf785ea

[3] https://www.forbes.com/sites/jonathanwai/2024/10/09/the-12-greatest-dangers-of-ai/

[4] https://www.artificialintelligence-news.com/news/are-ai-chatbots-really-changing-the-world-of-work/

[5] https://futureoflife.org/ai/six-month-letter-expires

[6] https://ai-2027.com/summary (The full report is fascinating, but long. You can at least get a sample of the “AI race” with this summary. And, to show both sides of this situation: This essay by Gary Marcus is a worthwhile criticism of the AI 2027 scenario.)

[7] This quick video was surfaced in BlueDot’s Future of AI course, a course I’d highly recommend to all: https://bluedot.org/courses/future-of-ai

[8] One example of acknowledged censorship: https://about.fb.com/news/2025/01/meta-more-speech-fewer-mistakes/